In ecological research, scientists frequently work with vast digital collections of wildlife images. Photographing each of North America's roughly 11,000 tree species, for example, would yield only a small fraction of the millions of photos in large nature image datasets. These collections, which span wildlife from butterflies to humpback whales, are invaluable resources for ecologists. They offer evidence of organisms' unique behaviors, rare ecological conditions, migration patterns, and responses to pollution and climate change.

Despite their breadth, these datasets are not yet as useful as they could be, because finding the images relevant to a specific research hypothesis is a labor-intensive process. An automated research assistant would help, and artificial intelligence systems called multimodal vision language models (VLMs) are a promising candidate. These models are trained on both images and text, which helps them pinpoint finer details, such as the specific trees in the background of a photo.

Evaluating VLMs for Ecological Research

But how effective are VLMs at helping ecologists retrieve images? A team from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), University College London, and iNaturalist designed performance tests to find out. The goal? To determine how well these models can locate and rank the most relevant images from the team's “INQUIRE” dataset, which contains 5 million wildlife pictures and 250 search prompts from ecologists and other biodiversity experts.

Performance Insights

In these evaluations, the researchers found that larger, more sophisticated VLMs trained on more data often performed better. On straightforward queries about visual content, such as identifying debris on a reef, these models did reasonably well. They struggled considerably, however, with queries that required expert knowledge. For instance, they could readily find images of jellyfish on the beach but had trouble with prompts such as “axanthism in a green frog,” a condition that prevents the frog from producing yellow pigment.

This points to a need for more domain-specific training data. Edward Vendrow, an MIT PhD student and co-lead of the project, suggests that as VLMs are trained on more targeted data, they could eventually become valuable research assistants. "Our goal is to create retrieval systems that deliver precise results for scientists studying biodiversity and climate change," Vendrow explains. "Multimodal models are still learning to navigate complex scientific language, but we project that INQUIRE will serve as an essential benchmark for assessing their progress in understanding scientific terminology."

The team used INQUIRE to test whether VLMs could narrow a pool of 5 million images down to the 100 most relevant matches. For a straightforward query like “a reef with manmade structures and debris,” larger models such as “SigLIP” found matching images, while smaller CLIP models lagged behind. According to Vendrow, larger VLMs are only beginning to be useful for ranking more complicated queries.
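This first stage can be pictured as a nearest-neighbor search. Models in the CLIP family, including SigLIP, map text and images into a shared embedding space, so the images whose vectors lie closest to the prompt's vector are the likeliest matches. The following is a minimal sketch under that assumption, not the INQUIRE team's code: it presumes the embeddings were computed in advance, scores them with cosine similarity (a common choice for such models), and keeps the top k. The image names and three-dimensional vectors are made up for illustration.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero vector as unrelated to everything
    return dot / (norm_a * norm_b)


def retrieve_top_k(
    query_embedding: Sequence[float],
    image_embeddings: Dict[str, Sequence[float]],
    k: int = 100,
) -> List[Tuple[str, float]]:
    """Return the k (image_id, score) pairs most similar to the query, best first."""
    scored = (
        (image_id, cosine_similarity(query_embedding, emb))
        for image_id, emb in image_embeddings.items()
    )
    # nlargest avoids sorting the whole pool when k is much smaller than it.
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Toy 3-dimensional "embeddings"; real CLIP/SigLIP vectors have hundreds of dimensions.
    query = [1.0, 0.0, 0.0]
    images = {
        "reef_debris": [0.9, 0.1, 0.0],
        "jellyfish_beach": [0.1, 0.9, 0.0],
        "green_frog": [0.0, 0.2, 0.9],
    }
    for image_id, score in retrieve_top_k(query, images, k=2):
        print(image_id, round(score, 3))
```

A pure-Python loop is fine for a toy example; searching 5 million images in practice would call for vectorized math or an approximate nearest-neighbor index.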

Reranking Performance

Vendrow and his colleagues then evaluated how well multimodal models could rerank those top 100 results so that the most relevant images rose to the top. Even the largest models struggled: GPT-4o reached a precision score of only 59.6 percent, the highest achieved by any model tested, which leaves substantial room for improvement.
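A second stage then refines that shortlist. The sketch that follows is an illustration under stated assumptions, not the study's method: the reranker is a stand-in scoring function (the hypothetical `vlm_scores` dictionary plays the role of a model such as GPT-4o judging each image against the prompt), and quality is measured with average precision at k, a standard ranking metric that rewards placing relevant images near the top. The exact metric behind the 59.6 percent figure is not specified here, so treat this as one plausible way such a score could be computed.

```python
from typing import Callable, List, Sequence, Set


def rerank(candidates: Sequence[str], score_fn: Callable[[str], float]) -> List[str]:
    """Reorder first-stage candidates by a second, finer-grained relevance score."""
    return sorted(candidates, key=score_fn, reverse=True)


def average_precision_at_k(ranking: Sequence[str], relevant: Set[str], k: int) -> float:
    """Average of precision@i over the ranks i <= k that hold a relevant image.

    Dividing by min(k, len(relevant)) makes a perfect ranking score 1.0.
    """
    if not relevant or k <= 0:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for i, image_id in enumerate(ranking[:k], start=1):
        if image_id in relevant:
            hits += 1
            precision_sum += hits / i  # precision among the top i results
    return precision_sum / min(k, len(relevant))


if __name__ == "__main__":
    first_stage = ["img_a", "img_b", "img_c", "img_d"]  # e.g. embedding-search order
    relevant = {"img_b", "img_d"}  # images an annotator marked as true matches
    # Hypothetical scores standing in for a VLM judging each (prompt, image) pair.
    vlm_scores = {"img_a": 0.1, "img_b": 0.9, "img_c": 0.2, "img_d": 0.8}
    reranked = rerank(first_stage, lambda image_id: vlm_scores[image_id])
    print(average_precision_at_k(first_stage, relevant, k=4))  # → 0.5
    print(average_precision_at_k(reranked, relevant, k=4))  # → 1.0
```

In the toy run, reranking moves both relevant images to the top, raising the score from 0.5 to 1.0.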

The researchers presented these findings at the recent Conference on Neural Information Processing Systems (NeurIPS).

The INQUIRE Dataset: A Deep Dive

The INQUIRE dataset grew out of extensive conversations with ecologists, biologists, oceanographers, and other experts about the kinds of images they would look for, particularly those showing unique animal behaviors and conditions. A team of annotators then spent 180 hours searching the iNaturalist dataset with these prompts, combing through roughly 200,000 candidate images to label 33,000 matches.

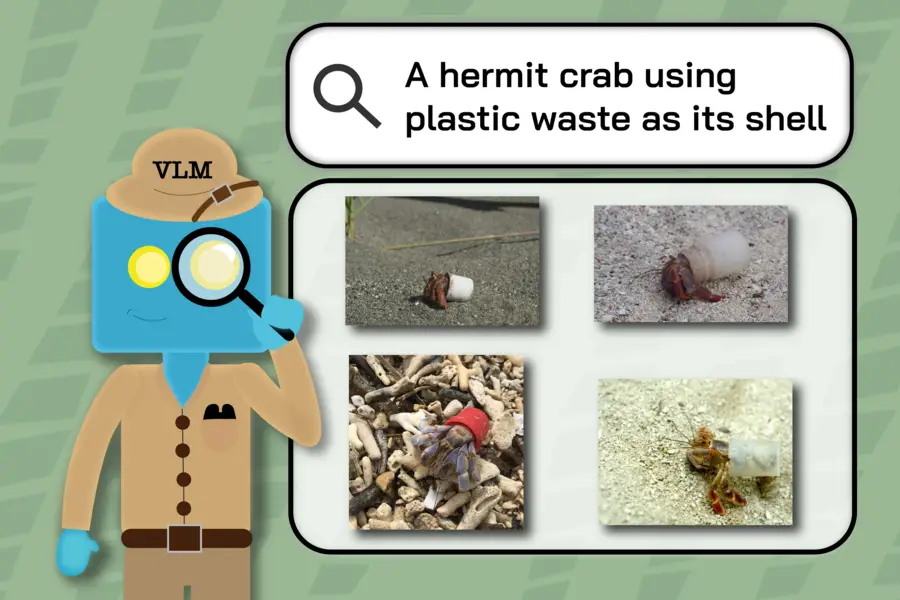

For instance, prompts such as “a hermit crab using plastic waste as its shell” or “a California condor tagged with a green ‘26’” guided annotators in isolating very specific events from the broader dataset.

The researchers tested VLMs against these curated prompts, revealing where the models fell short in interpreting scientific terminology. In some cases, the models returned images that had already been judged irrelevant to the search. For example, the query “redwood trees with fire scars” sometimes returned images of trees with no visible fire damage.

"This careful curation of data is essential for capturing real examples of scientific inquiries in ecology and environmental science," remarked Sara Beery, a co-senior author from MIT. "The study has highlighted the current capabilities of VLMs and identified gaps in existing research that require our attention—especially regarding complex compositional queries and technical terms."

Future Prospects

The researchers plan to extend the project by working with iNaturalist to develop a query system that makes it easier to find the images users are looking for. Their demo lets users filter searches by species, enabling quicker discovery of relevant results such as the distinct eye colors of cats. Vendrow and co-lead author Omiros Pantazis are also working to improve the reranking system so that it returns more accurate results.

Justin Kitzes, an associate professor at the University of Pittsburgh, praised INQUIRE's potential to surface secondary data. "Biodiversity datasets are quickly becoming too extensive for individual researchers to analyze thoroughly," he noted. "This study highlights the pressing need for effective search methods that go beyond basic questions about presence to address specific traits, behaviors, and interactions between species. Achieving this level of precise exploration is crucial for advancing ecological science and conservation efforts."

Conclusion

Vendrow, Pantazis, and Beery, together with a team of collaborators, carried out this work with support from the U.S. National Science Foundation and several universities, among other institutions. Their findings open pathways for further exploring how VLMs can improve image retrieval in ecological research, ultimately deepening our understanding of biodiversity and supporting its conservation.